In this tutorial, we will show you how to use Neocortix Cloud Services Scalable Compute to run a large load test, with potentially hundreds of devices, using the Locust load testing package and the runDistributedLoadtest.py command.

Preparing the Master Node

We assume you have already cloned our git repository at https://github.com/neocortix/ncscli.git onto the machine you will use as the Locust master, and installed the Python packages and Ansible, following the instructions in "Preparing the Master Node" in the Locust Load Test Introduction tutorial. Note: this procedure was tested on newly created VMs with 8 GB RAM running Ubuntu 18.04.
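Before going further, you may want to confirm that the master node has what the introduction tutorial installs. The following is a hypothetical preflight script, not part of ncscli: it only checks that `git` and `ansible` are on the PATH and that the script exists at the `~/ncscli/examples/loadtest` location used in this tutorial (adjust the path if you cloned elsewhere).

```python
"""Hypothetical preflight check for the Locust master node (a sketch, not part of ncscli)."""
import shutil
from pathlib import Path

# Assumed location, taken from the clone path used in this tutorial.
SCRIPT = Path.home() / "ncscli" / "examples" / "loadtest" / "runDistributedLoadtest.py"


def preflight(script: Path = SCRIPT) -> dict:
    """Return a mapping of check name -> whether that check passed."""
    return {
        "git on PATH": shutil.which("git") is not None,
        "ansible on PATH": shutil.which("ansible") is not None,
        "runDistributedLoadtest.py present": script.is_file(),
    }


if __name__ == "__main__":
    # Print one line per check; nothing is installed or modified.
    for name, ok in preflight().items():
        print(f"{'OK' if ok else 'MISSING':8s} {name}")
```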

runDistributedLoadtest.py

In that repository, in the subdirectory ~/ncscli/examples/loadtest, you will find the runDistributedLoadtest.py command. Its --help option prints:

```
usage: runDistributedLoadtest.py [-h] --authToken AUTHTOKEN
                                 [--altTargetHostUrl ALTTARGETHOSTURL]
                                 [--filter FILTER] [--launch LAUNCH]
                                 [--nWorkers NWORKERS]
                                 [--rampUpRate RAMPUPRATE]
                                 [--sshClientKeyName SSHCLIENTKEYNAME]
                                 [--startPort STARTPORT]
                                 [--targetUris [TARGETURIS [TARGETURIS ...]]]
                                 [--usersPerWorker USERSPERWORKER]
                                 [--startTimeLimit STARTTIMELIMIT]
                                 [--susTime SUSTIME]
                                 [--reqMsprMean REQMSPRMEAN] [--testId TESTID]
                                 victimHostUrl masterHost

positional arguments:
  victimHostUrl         url of the host to target as victim
  masterHost            hostname or ip addr of the Locust master

optional arguments:
  -h, --help            show this help message and exit
  --authToken AUTHTOKEN
                        the NCS authorization token to use (default: None)
  --altTargetHostUrl ALTTARGETHOSTURL
                        an alternative target host URL for comparison (default: None)
  --filter FILTER       json to filter instances for launch (default: None)
  --launch LAUNCH       to launch and terminate instances (default: True)
  --nWorkers NWORKERS   the # of worker instances to launch (or zero for all available) (default: 1)
  --rampUpRate RAMPUPRATE
                        # of simulated users to start per second (overall) (default: 0)
  --sshClientKeyName SSHCLIENTKEYNAME
                        the name of the uploaded ssh client key to use (default is random) (default: None)
  --startPort STARTPORT
                        a starting port number to listen on (default: 30000)
  --targetUris [TARGETURIS [TARGETURIS ...]]
                        list of URIs to target (default: None)
  --usersPerWorker USERSPERWORKER
                        # of simulated users per worker (default: 35)
  --startTimeLimit STARTTIMELIMIT
                        time to wait for startup of workers (in seconds) (default: 30)
  --susTime SUSTIME     time to sustain the test after startup (in seconds) (default: 10)
  --reqMsprMean REQMSPRMEAN
                        required ms per response (default: 1000)
  --testId TESTID       to identify this test (default: None)
```
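To size a test before launching, the help text suggests that the total number of simulated users is the number of workers times --usersPerWorker, and that --rampUpRate is the number of users started per second across the whole test. The hypothetical helper below (not part of ncscli) does only that arithmetic. It deliberately does not interpret the default --rampUpRate of 0, whose meaning the help text does not state. For the small test shown later, 20 requested instances at 10 users per worker gives the 200 simulated users its report prints.

```python
"""Back-of-the-envelope load planner (hypothetical helper, not part of ncscli).

Assumes total users = workers * usersPerWorker, and that a positive rampUpRate
(users started per second, overall) spreads user start-up evenly over time.
"""
from typing import NamedTuple, Optional


class LoadPlan(NamedTuple):
    total_users: int
    ramp_up_seconds: Optional[float]  # None when rampUpRate is 0 (meaning not documented)


def plan_load(n_workers: int, users_per_worker: int = 35, ramp_up_rate: float = 0) -> LoadPlan:
    if n_workers < 1:
        # --nWorkers 0 means "all available", so the count is unknown in advance.
        raise ValueError("pass the number of workers you expect to get")
    total = n_workers * users_per_worker
    ramp = total / ramp_up_rate if ramp_up_rate > 0 else None
    return LoadPlan(total, ramp)


print(plan_load(20, 10))      # LoadPlan(total_users=200, ramp_up_seconds=None)
print(plan_load(20, 35, 50))  # LoadPlan(total_users=700, ramp_up_seconds=14.0)
```

Keep in mind that not every requested instance becomes a working worker (19 of 20 in the small test, 775 of 813 in the large one), so the delivered load will usually be somewhat below the planned figure.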

Example Command

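This example command performs the same sequence of steps as our large daily test: it launches the instances, installs all prerequisites, installs the Locust client onto them, starts the Locust workers, runs the load test for 4 minutes (--susTime 240), retrieves the logs from the workers, shuts down the workers, terminates the instances, and formats the final report. As written, it requests 20 workers; our daily test instead launches all available nodes (usually around 800), which corresponds to --nWorkers 0. Standard output is redirected to runDist_out.txt: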

```shell
./runDistributedLoadtest.py http://yourTargetURL.com 54.70.xxx.xxx @myAuthToken \
    --nWorkers 20 --startTimeLimit 90 --susTime 240 --usersPerWorker 35 > runDist_out.txt
```
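The `@myAuthToken` argument is not described in the help text, although --authToken is required. A plausible reading, which we have not confirmed against the script's source, is that the parser is built with argparse's `fromfile_prefix_chars='@'`. In that case `@myAuthToken` names a file (here one called `myAuthToken`) whose lines are read as additional arguments, for example a single line `--authToken=...`. The sketch below reproduces only that mechanism with a cut-down parser; the parser, the placeholder token, and the temporary file are illustrative.

```python
import argparse
import os
import tempfile

# Assumption: the real script enables fromfile_prefix_chars="@", so "@path"
# expands in place to the arguments stored in that file, one per line.
ap = argparse.ArgumentParser(fromfile_prefix_chars="@")
ap.add_argument("victimHostUrl")
ap.add_argument("masterHost")
ap.add_argument("--authToken", required=True)
ap.add_argument("--nWorkers", type=int, default=1)

# Stand-in for the myAuthToken file; the token value is a placeholder.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as f:
    f.write("--authToken=YOUR_TOKEN_HERE\n")
    token_file = f.name

try:
    args = ap.parse_args(
        ["http://yourTargetURL.com", "54.70.xxx.xxx", "@" + token_file, "--nWorkers", "20"]
    )
    print(args.authToken, args.nWorkers)  # YOUR_TOKEN_HERE 20
finally:
    os.remove(token_file)
```

Keeping the token in a file like this also keeps it out of your shell history.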

Please note that 54.70.xxx.xxx in the above example is the IP address of the Locust master.

The master host is the machine on which you run these commands. It must be reachable by that IP address or hostname from outside whatever firewall you are behind, because that is how the workers communicate with the master. Substitute the correct IP address or hostname for your own master host.
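Because hundreds of workers must reach the master from outside your firewall, it can be worth checking that path from an outside machine before launching. The following is a minimal standard-library sketch. It assumes, based on the --startPort help text, that the master listens on TCP ports starting at 30000 by default, and it can only succeed while some process on the master is actually listening on the port you probe.

```python
import socket


def port_reachable(host: str, port: int = 30000, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port completes within timeout seconds.

    A False result means the connection was refused, filtered (e.g. by a
    firewall), timed out, or the hostname did not resolve.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, run `port_reachable("54.70.xxx.xxx", 30000)` from a machine outside your network, with your master's real address substituted.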

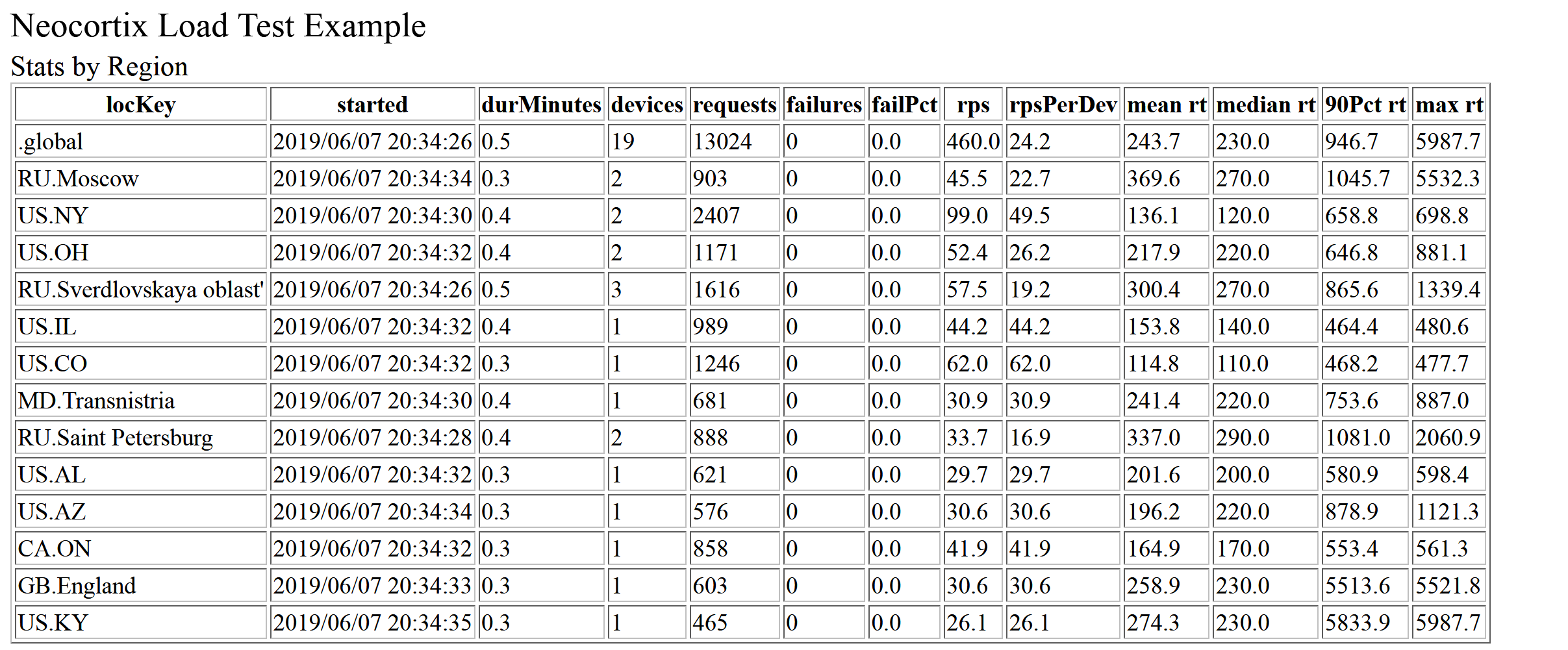

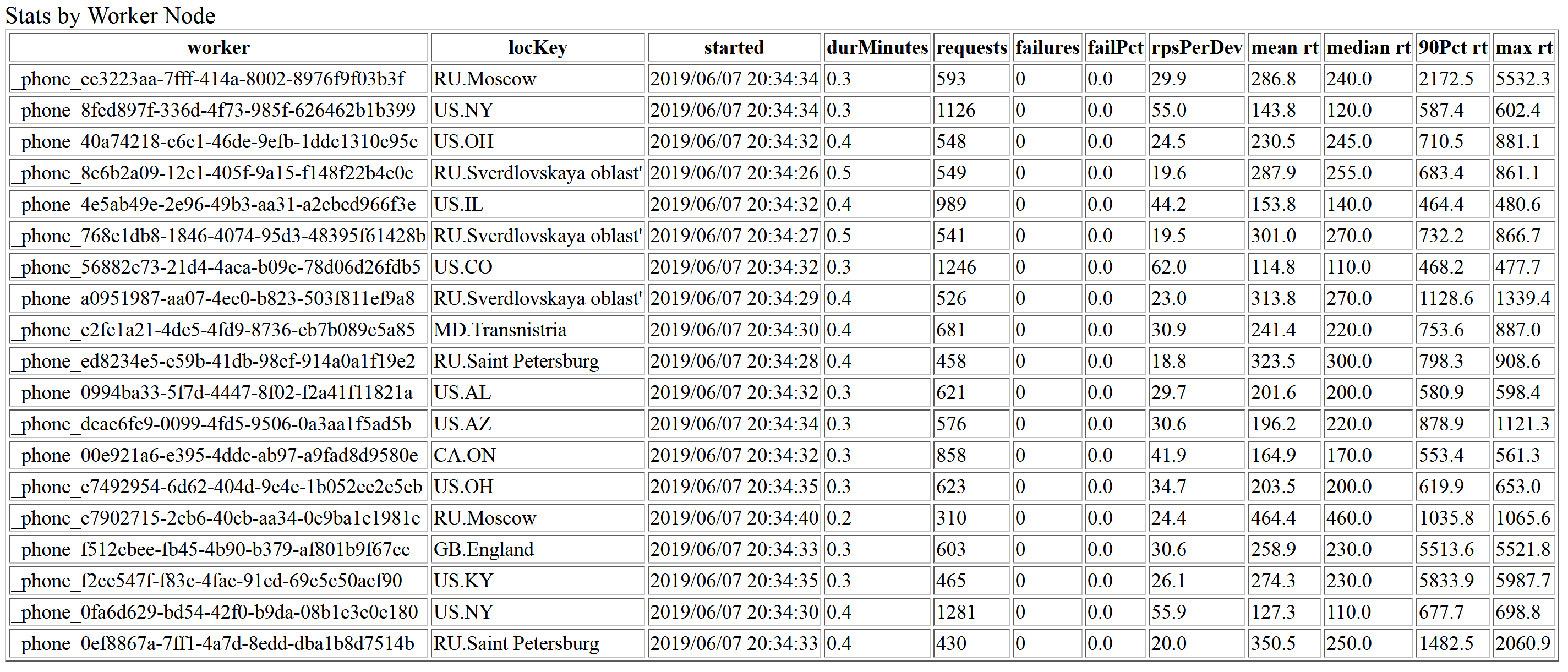

Example Output (Small Test)

Here is example output from a test run in which 20 instances were requested, with 10 simulated users per worker.

```
19 of 20 workers showed up, 19 workers working
200 simulated users
Load Test started: 2019/06/07 20:34:26
Duration 0.5 minutes
# of worker devices: 19
# of requests satisfied: 13024
requests per second: 460.0
RPS per device: 24.21
# of requests failed: 0
failure rate: 0.0%
mean response time: 243.7 ms
response time range: 56.0-5987.7 ms
```
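The derived figures in these reports can be recomputed from the raw counts, which is a quick way to check that you are reading them correctly. The sketch below is illustrative, not the script's code, and its formulas are assumptions inferred from the numbers: requests per second as satisfied requests divided by test duration, RPS per device as requests per second divided by the number of worker devices, and failure rate as failed requests divided by all requests.

```python
"""Recompute derived figures from a runDistributedLoadtest.py report (illustrative sketch).

Assumed formulas (inferred from the reports, not confirmed by the script's source):
  requests per second = requests satisfied / test duration
  RPS per device      = requests per second / # of worker devices
  failure rate        = failed / (satisfied + failed)
"""


def summarize(n_satisfied: int, n_failed: int, rps: float, n_devices: int) -> dict:
    total = n_satisfied + n_failed
    return {
        "duration_min": n_satisfied / rps / 60.0,
        "rps_per_device": rps / n_devices,
        "failure_rate_pct": 100.0 * n_failed / total if total else 0.0,
    }


small = summarize(13024, 0, 460.0, 19)            # small test above
large = summarize(10341697, 39354, 30094.2, 778)  # large daily test below
for label, s in (("small", small), ("large", large)):
    print(label, {k: round(v, 2) for k, v in s.items()})
```

Under these assumptions the recomputed values round to the reported 0.5 minutes, 24.21 RPS per device and 0.0% for the small test, and to 5.7 minutes, 38.68 RPS per device and 0.4% for the large test.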

Example Output (Large Test)

Here is example output from one of our large daily tests, in which 813 instances were available and requested and all 813 launched; 775 devices (95% of those requested) installed all prerequisites and ran successfully as Locust workers. The total load delivered (with measured response times) was about 30,000 requests per second over the load test's roughly 6-minute (5.7-minute) duration. The entire process took about 37 minutes.

```
Test Summary
2019-06-08T00:52:10.434004+00:00 testLoadtest.py
ncs launch job ID: 1fd0e196-b0e0-4e9f-b8e9-494a83c13fb8
813 devices listed as available
Requested 813 instances
320 device-replacement operations occurred
Launch started 813 Instances; Counter({'started': 813})
startWorkers found 789 good, 8 unreachable, 9 failed
runLocust
2019-06-08T01:14:19.003694
781 of 789 workers showed up, 775 workers working
peak RPS (nominal) 48.4
31438 simulated users
775 out of 813 = 95% success rate
Load Test started: 2019/06/08 01:14:17
Duration 5.7 minutes
# of worker devices: 778
# of requests satisfied: 10341697
requests per second: 30094.2
RPS per device: 38.68
# of requests failed: 39354
failure rate: 0.4%
mean response time: 476.7 ms
response time range: -149.2-200743.8 ms
2019-06-08T01:29:02.449684 testLocustSystem finished
elapsed time 36.9 minutes

Timing Summary (durations in minutes)
00:52:11 01:02:52 10.7 launch
01:02:52 01:06:53 4.0 installPrereqs
01:06:53 01:14:18 7.4 startWorkers
01:14:19 01:20:31 6.2 runLocust
01:20:31 01:21:49 1.3 killWorkerProcs
01:22:19 01:24:42 2.4 retrieveWorkerLogs
01:24:42 01:29:02 4.3 terminateInstances
00:52:10 01:29:02 36.9 testLoadtest
```
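Each Timing Summary line gives a phase's start time, end time, duration in minutes, and name, so the durations can be cross-checked against the timestamps. The sketch below (illustrative, not part of ncscli) parses the lines above, recomputes each phase's length in seconds, and reports how much of the overall testLoadtest span falls between phases.

```python
"""Cross-check a Timing Summary (illustrative sketch, not part of ncscli)."""
from datetime import datetime, timedelta

TIMING = """\
00:52:11 01:02:52 10.7 launch
01:02:52 01:06:53 4.0 installPrereqs
01:06:53 01:14:18 7.4 startWorkers
01:14:19 01:20:31 6.2 runLocust
01:20:31 01:21:49 1.3 killWorkerProcs
01:22:19 01:24:42 2.4 retrieveWorkerLogs
01:24:42 01:29:02 4.3 terminateInstances
00:52:10 01:29:02 36.9 testLoadtest
"""


def parse_timing(text: str) -> dict:
    """Map each phase name to (reported minutes, seconds computed from its timestamps)."""
    phases = {}
    for line in text.strip().splitlines():
        start, end, reported, name = line.split()
        t0 = datetime.strptime(start, "%H:%M:%S")
        t1 = datetime.strptime(end, "%H:%M:%S")
        if t1 < t0:  # the phase ran past midnight
            t1 += timedelta(days=1)
        phases[name] = (float(reported), int((t1 - t0).total_seconds()))
    return phases


phases = parse_timing(TIMING)
total_s = phases.pop("testLoadtest")[1]  # the overall span, not a phase
busy_s = sum(seconds for _, seconds in phases.values())
for name, (reported, seconds) in phases.items():
    print(f"{name:20s} reported {reported:5.1f}  computed {seconds / 60:5.2f}")
print(f"time between phases: {total_s - busy_s} s")  # 32 s
```

All seven phases round to their reported durations. The remaining 32 seconds are the gaps between phases, mostly the half minute between killWorkerProcs ending at 01:21:49 and retrieveWorkerLogs starting at 01:22:19.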